When: April 18th 2015

Where: Imperial College London, Skempton building, 201

Programme

10:00 Coffee

10:25 Introduction

10:30 Keynote: Alex Heinecke, Intel Parallel Computing, Santa Clara

“Fighting B/F Ratios in Scientific Computing by Solving PDEs in High Order”

11:30 Fabio Luporini, Imperial College London

“An algorithm for the optimization of finite element integration loops”

12:00 David Moxey, Imperial College London

“Optimizing the performance of the spectral/hp element method with collective linear algebra operations”

12:30 Lunch buffet with posters

14:00 Keynote: Karl Rupp

“FEM Integration with Quadrature and Preconditioners on GPUs”

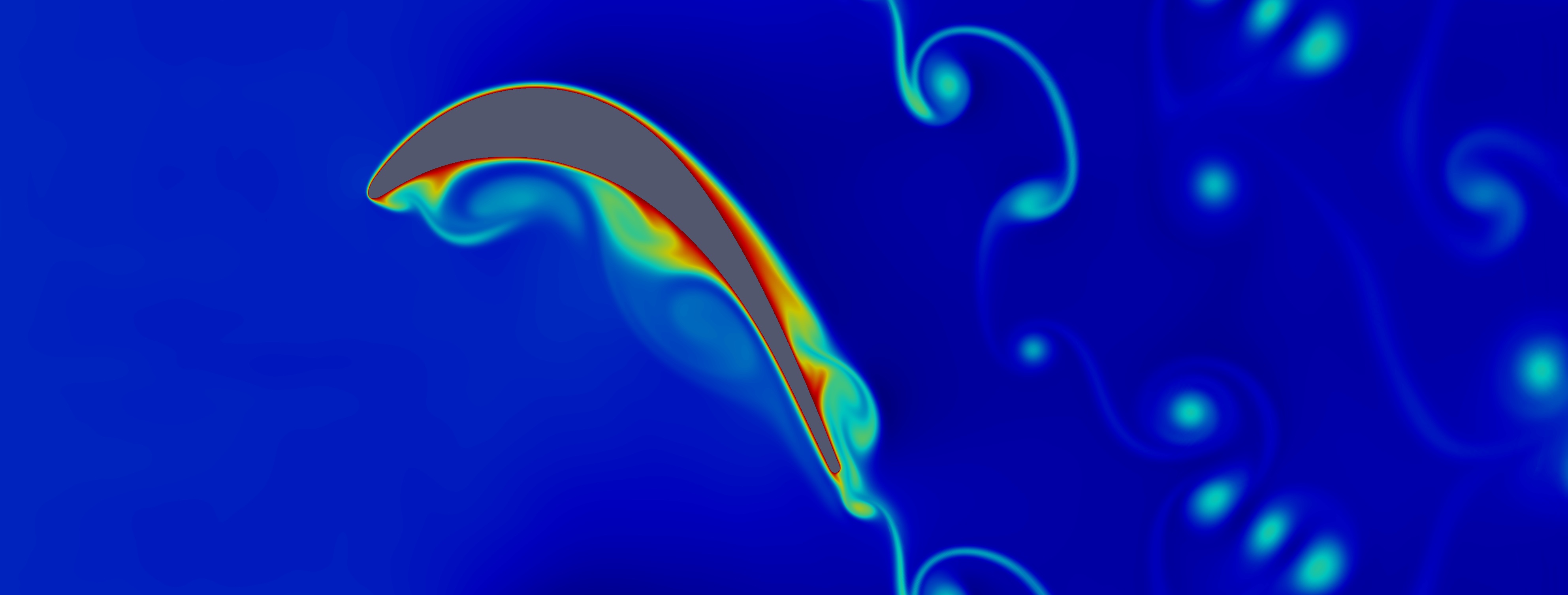

15:00 Peter Vincent, Imperial College London

“PyFR: An Open Source Python Framework for High-Order CFD on Heterogeneous Platforms”

15:45 Discussion: Implicit vs. explicit methods on accelerators

Keynote Speakers

Alexander Heinecke

Fighting B/F Ratios in Scientific Computing by Solving PDEs in High Order

Today’s and tomorrow’s architectures follow a common trend: wider vector instructions, which offer higher arithmetic throughput, combined with memory bandwidth that stays constant and is therefore relatively lower. When solving PDEs, high-order methods are a possible candidate for adapting to this hardware development. Their computational cost increases with order because of their higher arithmetic intensity, while the memory bandwidth they require decreases relative to the work performed. They therefore offer an adjustable trade-off between computational cost, required bandwidth and the accuracy delivered per degree of freedom. In this talk we examine how convergence order, clock frequency, vector instruction sets, alignment and chip-level parallelism affect the time to solution of higher-order discretizations, more precisely their time to accuracy, on yesterday’s, today’s and tomorrow’s CPU architectures. From a performance perspective, especially on state-of-the-art and future architectures, the shift from a memory-bound to a compute-bound scheme and the need for double-precision arithmetic with increasing order describe a compelling path for modern PDE solvers.
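The bytes-per-flop (B/F) argument above can be made concrete with the standard roofline model: a kernel's attainable performance is the lesser of the machine's peak compute rate and its memory bandwidth multiplied by the kernel's arithmetic intensity. The sketch below is a minimal illustration using hypothetical machine numbers (1000 GFLOP/s peak, 100 GB/s bandwidth) and hypothetical kernel intensities; the function names are ours, not taken from the talk.

```python
# Minimal roofline sketch. The machine parameters below are hypothetical
# illustration values, not measurements of any real CPU.

def machine_balance(peak_gflops: float, bandwidth_gbs: float) -> float:
    """Bytes the memory system delivers per flop of peak compute (the B/F ratio)."""
    return bandwidth_gbs / peak_gflops


def ridge_point(peak_gflops: float, bandwidth_gbs: float) -> float:
    """Arithmetic intensity (flop/byte) at or above which a kernel is compute-bound."""
    return peak_gflops / bandwidth_gbs


def attainable_gflops(peak_gflops: float, bandwidth_gbs: float, intensity: float) -> float:
    """Roofline bound: min(compute peak, bandwidth * arithmetic intensity)."""
    return min(peak_gflops, bandwidth_gbs * intensity)


if __name__ == "__main__":
    PEAK = 1000.0  # GFLOP/s, hypothetical
    BW = 100.0     # GB/s, hypothetical
    print(f"B/F ratio: {machine_balance(PEAK, BW):.2f} bytes/flop")
    print(f"ridge point: {ridge_point(PEAK, BW):.1f} flop/byte")
    # Hypothetical kernel intensities, ranging from memory-bound to compute-bound
    for ai in (0.5, 2.0, 10.0, 20.0):
        bound = "compute" if ai >= ridge_point(PEAK, BW) else "memory"
        perf = attainable_gflops(PEAK, BW, ai)
        print(f"intensity {ai:5.1f} flop/byte -> {perf:7.1f} GFLOP/s ({bound}-bound)")
```

On this hypothetical machine the B/F ratio is 0.1 and the ridge point is 10 flop/byte. A kernel at 0.5 flop/byte is capped at 50 GFLOP/s by bandwidth, while one at 20 flop/byte can reach the full peak. This is why raising arithmetic intensity, for example by increasing the discretization order, pays off as B/F ratios fall.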

Alexander Heinecke studied Computer Science and Finance and Information Management at Technische Universität München, Germany. In 2010 and 2012 he completed internships at Intel in Munich, Germany, and at Intel Labs in Santa Clara, CA, USA. He completed his Ph.D. at TUM in 2013 and joined Intel’s Parallel Computing Lab in Santa Clara in 2014. His core research topic is the use of multi- and many-core architectures in advanced scientific computing applications.

Awards: Alexander Heinecke received the Intel Doctoral Student Honor Programme Award (http://blogs.intel.com/intellabs/2012/10/25/intel-honors-9-faculty-and-25-doctoral-students-with-awards-at-intel%E2%80%99s-european-research-and-innovation-conference-eric/) in 2012. In November 2012 he was part of a team that placed the Beacon System #1 on the Green500 list. In 2013 and 2014 he and his co-authors received the PRACE ISC Award for achieving petascale performance in molecular dynamics and seismic hazard modelling on more than 140,000 cores. In 2014 he and his co-authors were additionally selected as Gordon Bell finalists for running multi-physics earthquake simulations at multi-petaflop performance on more than 1.5 million cores.

Karl Rupp

FEM Integration with Quadrature and Preconditioners on GPUs

Efficient integration of low-order elements on a GPU has proven difficult. Previous work has shown how to integrate a differential form (such as Laplace or elasticity) efficiently using algebraic simplification and exact integration. This approach, however, breaks down for multilinear forms or when a coefficient is present. In this talk, I present results from joint work with M. Knepley and A. Terrel on how to efficiently integrate an arbitrary form using quadrature. The key is a technique we call “thread transposition”, which matches the work done during evaluation at quadrature points to that done during basis coefficient evaluation. We achieve more than 300 GFLOP/s for the variable-coefficient Laplacian and provide a performance model that explains these results.
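The two phases that the abstract matches against each other can be sketched on the CPU for a 1D variable-coefficient Laplacian. The first phase evaluates a field at quadrature points from its basis coefficients, and the second integrates the pointwise result back onto the basis functions. The example assumes linear elements and a 2-point Gauss rule. It is a minimal serial illustration under those assumptions and does not implement the GPU thread-transposition technique itself. All names are hypothetical.

```python
import math
from typing import Callable, List

# 2-point Gauss-Legendre rule on the reference interval [-1, 1]
GAUSS_POINTS = [-1.0 / math.sqrt(3.0), 1.0 / math.sqrt(3.0)]
GAUSS_WEIGHTS = [1.0, 1.0]

# d/dxi of the linear basis phi_0 = (1 - xi)/2 and phi_1 = (1 + xi)/2
DPHI_DXI = [-0.5, 0.5]


def element_residual(x0: float, x1: float, u: List[float],
                     k: Callable[[float], float]) -> List[float]:
    """r_i = integral over [x0, x1] of k(x) * u'(x) * phi_i'(x), by quadrature."""
    jac = (x1 - x0) / 2.0  # dx/dxi of the affine map from [-1, 1] to [x0, x1]
    nq = len(GAUSS_POINTS)
    # Tabulate physical basis derivatives at each quadrature point: tab[q][j]
    tab = [[d / jac for d in DPHI_DXI] for _ in range(nq)]

    # Phase 1: basis coefficients -> gradient values at quadrature points
    du_q = [sum(tab[q][j] * u[j] for j in range(2)) for q in range(nq)]

    # Pointwise work: apply the coefficient k(x_q), the weight and the Jacobian
    f_q = []
    for q, xi in enumerate(GAUSS_POINTS):
        x_q = x0 + (xi + 1.0) * jac
        f_q.append(GAUSS_WEIGHTS[q] * jac * k(x_q) * du_q[q])

    # Phase 2: quadrature points -> basis functions, using the transposed table
    return [sum(tab[q][i] * f_q[q] for q in range(nq)) for i in range(2)]


if __name__ == "__main__":
    # u(x) = x on [0, 1] with k(x) = x; the exact residual is [-0.5, 0.5]
    print(element_residual(0.0, 1.0, [0.0, 1.0], lambda x: x))
```

The second phase contracts with the transpose of the table used in the first phase. This symmetry between evaluating at quadrature points and projecting back onto the basis is the structure a GPU kernel has to balance across threads.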

The second part of the talk discusses performance aspects of preconditioners for GPUs, in particular algebraic multigrid. While applying the preconditioner maps well to the fine-grained parallelism provided by GPUs, our benchmarks indicate that GPUs have to be paired with powerful CPUs to obtain the best performance.

Karl Rupp holds master’s degrees in microelectronics and in technical mathematics from TU Wien and completed his doctoral degree on deterministic numerical solutions of the Boltzmann transport equation in 2011. During his doctoral studies he started several interacting free open-source projects, including the GPU-accelerated linear algebra library ViennaCL. After a one-year postdoctoral position with the PETSc team at Argonne National Laboratory, USA, and a research project on improving the efficiency of semiconductor device simulators at TU Wien, he is now a freelance scientist. His current activities include the GPU acceleration of large-scale geodynamics simulations.
